Hello guys, here is another tutorial from my side. Today I was building an application where I had to read from and write to CSV files in Node.js.

After a couple of minutes, I quickly came up with a solution thanks to GitHub Copilot. Well, let's get started on this tutorial.

Reading and writing a CSV file using Node.js

Building a Node.js application

Let's go ahead and build a simple Node.js application using npm. If you don't already have Node.js installed on your system, please go ahead and install it first.

npm init -y

-y (short for --yes) is an optional flag that accepts the default configuration. Once that is done, you can put any CSV file you want to read from or write to in the project folder.
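If you don't have a CSV file at hand, here is a minimal sketch that creates one. The column names and rows are made up for illustration (they are not from my application), and the helper name createSampleCsv is hypothetical.

```javascript
const fs = require("fs")

// Hypothetical sample data; replace it with your own columns and rows.
const sampleLines = [
  "id,firstName,lastName,email",
  "1,Jane,Doe,jane@example.com",
  "2,John,Smith,john@example.com",
]

// Join the rows with "\n" and end the file with a trailing newline.
const createSampleCsv = (filePath) => {
  fs.writeFileSync(filePath, sampleLines.join("\n") + "\n", "utf8")
}

createSampleCsv("sample.csv")
```

Running this once gives you a sample.csv file in the current folder to try the reading code on.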

Reading from a CSV file

First of all, let's look at how we can read the data and parse it the way we want. I will only be parsing the data into objects. Shall we get started?

1. Without using an npm package: creating your own CSV parser

// Importing the fs module to read the file
const fs = require("fs")

const data = fs.readFileSync("sample.csv", "utf8") // using 'utf8' encoding

// Splitting the CSV into rows: "\n" (or "\r\n" on Windows) marks a new row.
// Empty lines, such as the one left by a trailing newline, are dropped.
const rows = data.split(/\r?\n/).filter((row) => row.trim() !== "")

// Getting the attributes (column names) from index 0 of the rows
const getAttribute = () => {
  return rows[0].split(",")
}

// Further splitting the remaining rows into columns
const getRowData = () => {
  let rowData = []
  for (let i = 1; i < rows.length; i++) {
    const row = rows[i].split(",")
    rowData.push(row)
  }
  return rowData
}

// The CSV parser
const CSVToObject = () => {
  const attributes = getAttribute()
  const rowData = getRowData()
  let rowsObj = []
  for (let i = 0; i < rowData.length; i++) {
    let rowObj = {}
    for (let j = 0; j < rowData[i].length; j++) {
      rowObj[attributes[j]] = rowData[i][j] // using the attribute as the key
    }
    rowsObj.push(rowObj)
  }
  return rowsObj
}

let newData = CSVToObject()
console.log(newData) // Output of the data

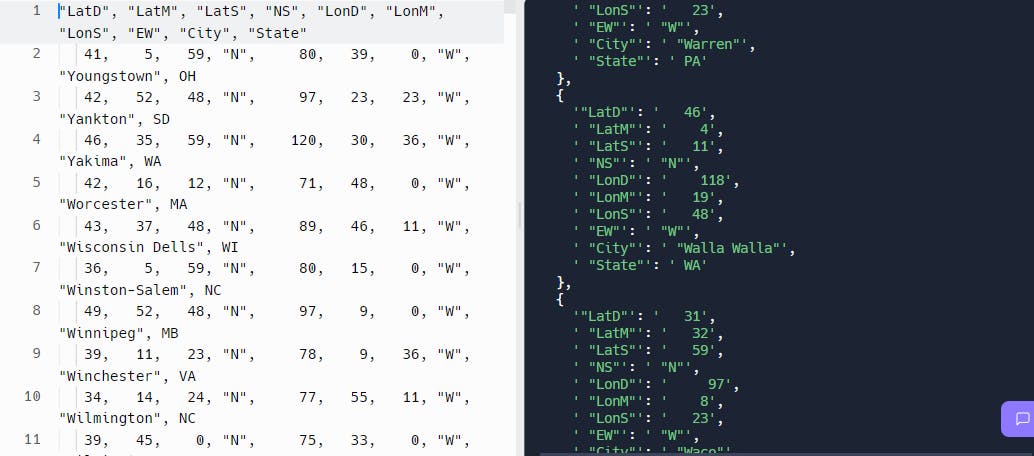

In the code above, we read a CSV file and split it into rows, using the fact that each row ends with "\n". We then take the first row (index 0 of the array) as the attributes, that is, the column names used in the CSV. Finally, we split each remaining row on "," because the columns within a row are separated by commas, and pair each value with its attribute to build one object per row.

Here are the results:
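One thing to keep in mind: splitting on every comma breaks as soon as a value itself contains a comma, which CSV files usually wrap in double quotes. Below is a rough sketch of how a single line could be split while respecting quotes. The function name parseCsvLine is my own, it assumes the common convention that "" inside a quoted field means a literal quote, and it does not handle line breaks inside quoted fields.

```javascript
// Splits one CSV line into fields, honouring double-quoted fields.
// Inside quotes, commas are kept and "" stands for a literal quote character.
const parseCsvLine = (line) => {
  const fields = []
  let current = ""
  let inQuotes = false
  for (let i = 0; i < line.length; i++) {
    const char = line[i]
    if (inQuotes) {
      if (char === '"' && line[i + 1] === '"') {
        current += '"' // escaped quote inside a quoted field
        i++
      } else if (char === '"') {
        inQuotes = false // closing quote
      } else {
        current += char
      }
    } else if (char === '"') {
      inQuotes = true // opening quote
    } else if (char === ",") {
      fields.push(current) // a comma outside quotes ends the field
      current = ""
    } else {
      current += char
    }
  }
  fields.push(current) // last field of the line
  return fields
}

// The second field keeps its comma because it is quoted.
console.log(parseCsvLine('1,"Doe, Jane",jane@example.com'))
```

You could swap this in for the plain split(",") calls in the parser if your data has quoted values.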

2. Using a third-party library like fast-csv

Well, if you are not that into programming, or you don't have the time to write the logic on your own, you can use an npm library like fast-csv. It has parsing and formatting features out of the box and is easy to use with Node.js.

You can read more about it here.

npm install fast-csv

//or

yarn add fast-csv

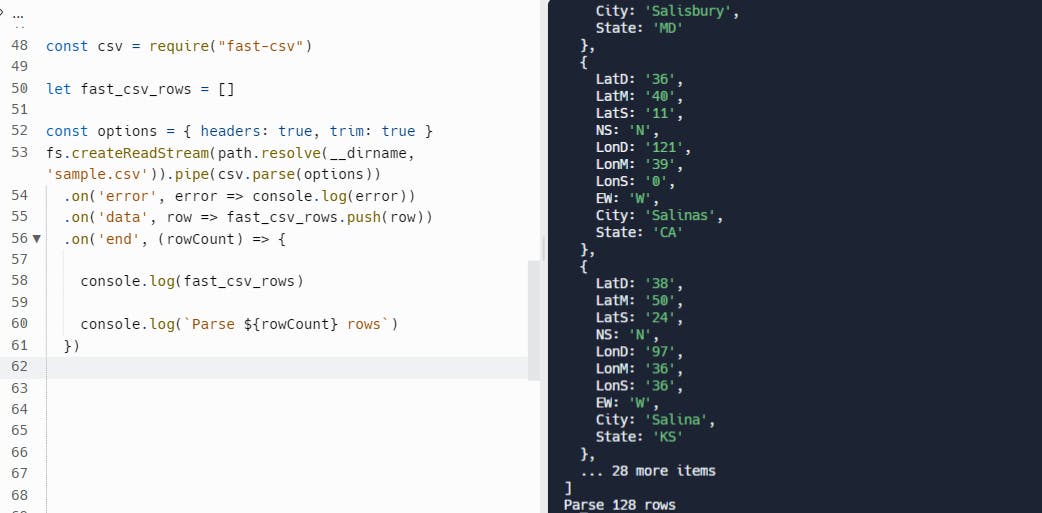

Once that is done, we will write some more code that simply logs each row to the console as an object.

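const csv = require("fast-csv") // Importing the library
const path = require("path") // Needed to resolve the path of the CSV file
// fs was already imported above; add const fs = require("fs") if this is a separate file

// Options for fast-csv: use the first row as headers and trim whitespace
const options = { headers: true, trim: true }

fs.createReadStream(path.resolve(__dirname, 'sample.csv'))
  .pipe(csv.parse(options))
  .on('error', error => console.error(error))
  .on('data', row => console.log(row))
  .on('end', (rowCount) => console.log(`Parsed ${rowCount} rows`))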

Further, you can save the rows as an array of objects by adding one small line of code, for example by pushing each row onto an array in the 'data' handler.

Here is the result :)
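To make the "array of objects" idea concrete, here is a small sketch that collects every row a stream emits. The helper name collectRows is hypothetical, and so that it runs without installing anything, the example feeds it a stand-in stream built with Readable.from instead of the real fast-csv parser.

```javascript
const { Readable } = require("stream")

// Collects every "data" event of an object-mode stream into an array
// and resolves with that array once the stream ends.
const collectRows = (stream) =>
  new Promise((resolve, reject) => {
    const rows = []
    stream
      .on("error", reject)
      .on("data", (row) => rows.push(row)) // the "small line of code"
      .on("end", () => resolve(rows))
  })

// Stand-in for the parser stream (made-up rows). With fast-csv you would pass
//   fs.createReadStream("sample.csv").pipe(csv.parse({ headers: true, trim: true }))
const fakeParser = Readable.from([
  { id: "1", firstName: "Jane" },
  { id: "2", firstName: "John" },
])

collectRows(fakeParser).then((rows) => console.log(rows))
```

Wrapping the stream in a Promise is just one way to do it; pushing into an array declared outside the handlers works too if you only need the rows in the 'end' callback.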

Writing to a CSV file

In this section, we will simply write a line to a CSV file by hand. The same can be achieved with fast-csv, and the logic is similar.

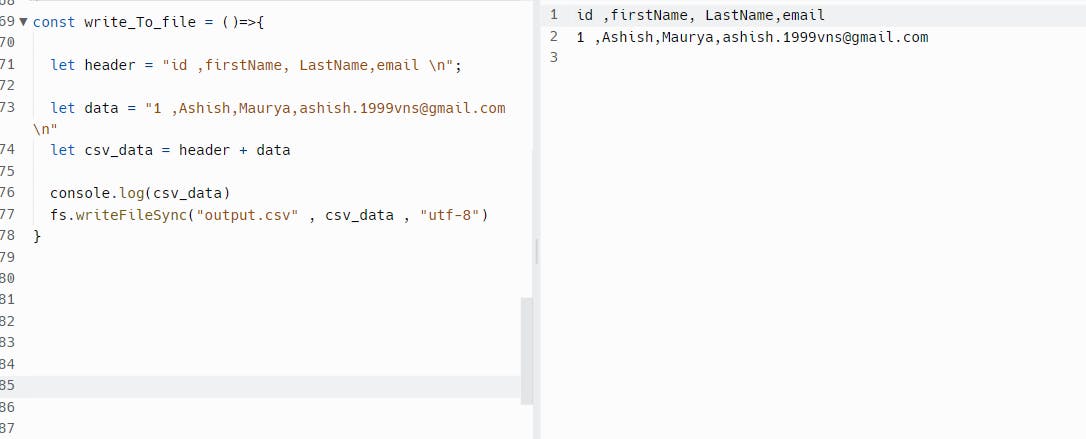

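1. Creating your own method to write to a file

// fs was already imported above; add const fs = require("fs") if this is a separate file
const writeTofile = () => {
  // No spaces around the commas, otherwise they become part of the values
  let header = "id,firstName,lastName,email\n"
  let data = "1,Ashish,Maurya,ashish.1999vns@gmail.com\n"
  let csv_data = header + data
  console.log(csv_data)
  // Creates output.csv, or overwrites it if it already exists
  fs.writeFileSync("output.csv", csv_data, "utf-8")
}

writeTofile()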

Output:
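Hard-coding the header and the row is fine for a single line, but in practice you usually have an array of objects. Here is a sketch of how the same idea could be generalised. The helper names escapeCsvValue and objectsToCsv and the rows are made up for illustration, and the quoting follows the usual CSV convention of wrapping values that contain commas, quotes or line breaks in double quotes.

```javascript
const fs = require("fs")

// Quote a value only when it contains a comma, a quote or a line break.
const escapeCsvValue = (value) => {
  const text = value == null ? "" : String(value)
  return /[",\r\n]/.test(text) ? `"${text.replace(/"/g, '""')}"` : text
}

// Build CSV text from an array of objects.
// The keys of the first object are used as the header row.
const objectsToCsv = (rows) => {
  if (rows.length === 0) return ""
  const headers = Object.keys(rows[0])
  const lines = rows.map((row) =>
    headers.map((key) => escapeCsvValue(row[key])).join(",")
  )
  return [headers.map(escapeCsvValue).join(","), ...lines].join("\n") + "\n"
}

// Hypothetical rows, not real data
const people = [
  { id: 1, firstName: "Jane", lastName: "Doe", email: "jane@example.com" },
  { id: 2, firstName: "John", lastName: "Smith, Jr.", email: "john@example.com" },
]

fs.writeFileSync("output.csv", objectsToCsv(people), "utf-8")
```

The second row's last name contains a comma, so it ends up quoted in the file and can be read back without shifting the columns.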

The End

I hope you learned something new and will enjoy using these techniques. If you found this meaningful, consider following me on Twitter.